I recently upgraded most of my FreePBX systems to the latest version of the Firewall module to take advantage of what finally seems to be a working approach to Let’s Encrypt certificates. However, I started getting high CPU usage alerts on the machines where I switched on the fail2ban firewall sync. The load seems to come from a number of fail2ban commands that run on some kind of schedule, perhaps via cron. When I switched back to Legacy, CPU usage dropped noticeably. Syncing this data obviously requires some CPU, but not on the scale I have been seeing. While digging around, I saw that some people have had CPU problems with fail2ban loading and reading large logs. That seems unlikely to be the culprit here, but I thought I’d throw it out there. Has anyone else experienced these problems?

You say a number of fail2ban commands; please expand on that, as fail2ban is single-threaded.

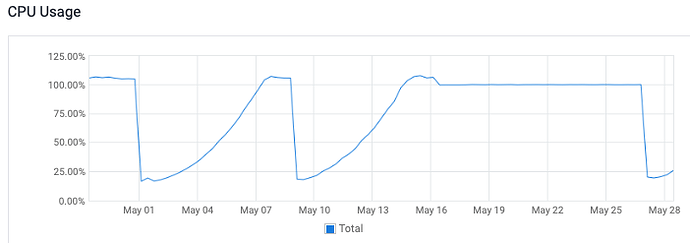

I saw them while watching htop. I re-enabled the feature this morning to try to grab a screenshot, but they don’t seem to be showing up in htop right now. This might be related to the fact that CPU utilization slowly increases over the course of a few days. Here is a graph of CPU utilization from a server with sync enabled.

The first drop back to normal happened after a reboot. The second drop came after I switched back to Legacy. It may take a day or two for the CPU load to climb high enough for me to capture the specific commands that were showing up in htop.

But where are the fail2ban commands?

ps -aux|grep fail2ban

They aren’t showing up right now. I expect they will show up later today or tomorrow as CPU utilization begins to climb. They would use a lot of CPU for a few seconds, disappear for a minute or two, and then reappear. The only “commands” I’m getting right now are:

root 10117 0.0 0.0 112708 988 pts/0 R+ 11:58 0:00 grep --color=auto fail2ban

root 11640 0.1 0.5 854236 10052 ? Sl Dec30 1:15 /usr/bin/python /usr/bin/fail2ban-server -b -s /var/run/fail2ban/fail2ban.sock -p /var/run/fail2ban/fail2ban.pid -x

But this is not representative of what was showing up when the CPU was overloaded. As I recall, they were jail commands, but I will update this thread when I see them again.
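Because the processes described here live for only a few seconds every minute or two, a one-off `ps -aux|grep fail2ban` can easily miss them, and the `grep` also matches its own process (the `grep --color=auto fail2ban` line in the output above). A minimal sketch, assuming a POSIX shell with procps `ps`: it samples the process table at a fixed interval and logs a timestamped count of `fail2ban-client` processes. The function names, the 2-second interval and the sample count are hypothetical choices, not anything FreePBX or fail2ban provides.

```shell
#!/bin/sh
# Hypothetical helper: count fail2ban-client processes in
# `ps -e -o command=` style input read from stdin.
# Writing the pattern as "[f]ail2ban-client" means a grep for it never
# matches its own command line in a process listing.
count_clients() {
  grep -c '[f]ail2ban-client' || true   # grep -c prints 0 and exits 1 on no match
}

# Hypothetical helper: take <samples> snapshots <interval> seconds apart and
# print "<timestamp> <number of fail2ban-client processes>" for each one.
sample_loop() {
  interval=${1:-2}
  samples=${2:-150}
  i=0
  while [ "$i" -lt "$samples" ]; do
    n=$(ps -e -o command= | count_clients)
    printf '%s %s\n' "$(date '+%Y-%m-%d %H:%M:%S')" "$n"
    sleep "$interval"
    i=$((i + 1))
  done
}
```

For example, after sourcing the functions, `sample_loop 2 150 >> /tmp/f2b-clients.log` covers roughly five minutes, which should span at least one of the 3-5 minute bursts other posters describe; the log path is only an illustration.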

Hi

We have the same issue on every PBX where we enabled the sync.

Our monitoring shows a parallel increase in RAM and load average, and every 3-5 minutes a lot of fail2ban-client processes running:

3235 root 20 0 183820 10020 3444 R 38.4 0.5 0:01.16 fail2ban-client

3300 root 20 0 182376 8584 3444 R 32.8 0.4 0:00.99 fail2ban-client

3826 root 20 0 1241480 21332 1268 R 30.8 1.0 86:59.15 fail2ban-server

3252 root 20 0 182364 8568 3444 R 30.1 0.4 0:00.91 fail2ban-client

3257 root 20 0 182356 8556 3444 R 28.1 0.4 0:00.85 fail2ban-client

3280 root 20 0 182356 8564 3444 R 27.8 0.4 0:00.84 fail2ban-client

3267 root 20 0 182344 8548 3444 R 24.8 0.4 0:00.75 fail2ban-client

3316 root 20 0 182336 8544 3444 R 22.8 0.4 0:00.69 fail2ban-client

3274 root 20 0 182336 8544 3444 R 22.2 0.4 0:00.67 fail2ban-client

3291 root 20 0 182324 8536 3444 R 19.9 0.4 0:00.60 fail2ban-client

3312 root 20 0 182192 8268 3444 R 19.5 0.4 0:00.59 fail2ban-client

3340 root 20 0 182192 8276 3444 R 14.2 0.4 0:00.43 fail2ban-client

3334 root 20 0 182192 8236 3436 R 12.9 0.4 0:00.39 fail2ban-client

3347 root 20 0 182192 8272 3444 R 5.6 0.4 0:00.17 fail2ban-client

3357 root 20 0 182192 8276 3444 R 2.6 0.4 0:00.08 fail2ban-client

3366 root 20 0 182192 8276 3444 R 2.3 0.4 0:00.07 fail2ban-client

After switching back to the Legacy option, there are no more multiple fail2ban-client processes and no more high CPU.
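The `top` snapshot in that report lists 15 `fail2ban-client` processes at once, which together add up to roughly 304% CPU, on top of 30.8% for `fail2ban-server`. By default `top` reports each process relative to a single CPU, so on a multi-core machine the sum can exceed 100%. A minimal sketch for reducing such a snapshot to one line, assuming procps `top` in batch mode with its default column layout, where %CPU is the 9th field and COMMAND the 12th; a customised layout or another `top` implementation would need different field numbers. The function name is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: read `top -b -n 1` output on stdin and print how many
# fail2ban-client processes it lists and the sum of their %CPU values.
# Assumes the default procps top columns:
#   PID USER PR NI VIRT RES SHR S %CPU %MEM TIME+ COMMAND
# so %CPU is field 9 and COMMAND is field 12.
summarize_clients() {
  awk '$12 == "fail2ban-client" { n++; cpu += $9 }
       END { printf "%d clients, %.1f%% CPU total\n", n, cpu }'
}
```

Typical use would be `top -b -n 1 | summarize_clients`; fed the 16 process lines quoted in that report, it prints `15 clients, 304.0% CPU total`.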


Me too: when I enabled sync, the CPU jumped to 100%.

I ran ps -e -o command | grep fail2ban | sort | uniq

This is the result (I filtered out some of the public IPs; I think these are all the IPs defined in the firewall as trusted or local):

sh -c /usr/bin/fail2ban-client get apache-tcpwrapper ignoreip | grep - | cut -d' ' -f2 | uniq

sh -c /usr/bin/fail2ban-client get asterisk-iptables ignoreip | grep - | cut -d' ' -f2 | uniq

sh -c /usr/bin/fail2ban-client get pbx-gui ignoreip | grep - | cut -d' ' -f2 | uniq

sh -c /usr/bin/fail2ban-client get recidive ignoreip | grep - | cut -d' ' -f2 | uniq

sh -c /usr/bin/fail2ban-client get ssh-iptables ignoreip | grep - | cut -d' ' -f2 | uniq

sh -c /usr/bin/fail2ban-client get vsftpd-iptables ignoreip | grep - | cut -d' ' -f2 | uniq

/usr/bin/python /usr/bin/fail2ban-client get apache-badbots ignoreip

/usr/bin/python /usr/bin/fail2ban-client get apache-tcpwrapper ignoreip

/usr/bin/python /usr/bin/fail2ban-client get asterisk-iptables ignoreip

/usr/bin/python /usr/bin/fail2ban-client get pbx-gui ignoreip

/usr/bin/python /usr/bin/fail2ban-client get recidive ignoreip

/usr/bin/python /usr/bin/fail2ban-client get ssh-iptables ignoreip

/usr/bin/python /usr/bin/fail2ban-client get vsftpd-iptables ignoreip

/usr/bin/python /usr/bin/fail2ban-client set apache-badbots addignoreip 10.9.25.158/29?

/usr/bin/python /usr/bin/fail2ban-client set apache-badbots addignoreip 127.0.0.1?

/usr/bin/python /usr/bin/fail2ban-client set apache-badbots delignoreip 10.9.25.158/29

/usr/bin/python /usr/bin/fail2ban-client set apache-badbots delignoreip 192.168.145.0/24

/usr/bin/python /usr/bin/fail2ban-client set apache-tcpwrapper addignoreip 10.9.25.158/29?

/usr/bin/python /usr/bin/fail2ban-client set apache-tcpwrapper addignoreip 127.0.0.1?

/usr/bin/python /usr/bin/fail2ban-client set apache-tcpwrapper addignoreip 192.168.145.0/24?

/usr/bin/python /usr/bin/fail2ban-client set apache-tcpwrapper delignoreip 10.9.25.158/29

/usr/bin/python /usr/bin/fail2ban-client set asterisk-iptables addignoreip 10.9.25.158/29?

/usr/bin/python /usr/bin/fail2ban-client set asterisk-iptables addignoreip 127.0.0.1?

/usr/bin/python /usr/bin/fail2ban-client set asterisk-iptables addignoreip 192.168.145.0/24?

/usr/bin/python /usr/bin/fail2ban-client set asterisk-iptables delignoreip 10.9.25.158/29

/usr/bin/python /usr/bin/fail2ban-client set pbx-gui addignoreip 10.9.25.158/29?

/usr/bin/python /usr/bin/fail2ban-client set pbx-gui addignoreip 127.0.0.1?

/usr/bin/python /usr/bin/fail2ban-client set pbx-gui addignoreip 192.168.145.0/24?

/usr/bin/python /usr/bin/fail2ban-client set recidive addignoreip 10.9.25.158/29?

/usr/bin/python /usr/bin/fail2ban-client set recidive addignoreip 127.0.0.1?

/usr/bin/python /usr/bin/fail2ban-client set recidive delignoreip 10.9.25.158/29

/usr/bin/python /usr/bin/fail2ban-client set recidive delignoreip 192.168.145.0/24

/usr/bin/python /usr/bin/fail2ban-client set ssh-iptables addignoreip 10.9.25.158/29?

/usr/bin/python /usr/bin/fail2ban-client set ssh-iptables addignoreip 127.0.0.1?

/usr/bin/python /usr/bin/fail2ban-client set ssh-iptables addignoreip 192.168.145.0/24?

/usr/bin/python /usr/bin/fail2ban-client set ssh-iptables delignoreip 10.9.25.158/29

/usr/bin/python /usr/bin/fail2ban-client set ssh-iptables delignoreip 192.168.145.0/24

/usr/bin/python /usr/bin/fail2ban-client set vsftpd-iptables addignoreip 10.9.25.158/29?

/usr/bin/python /usr/bin/fail2ban-client set vsftpd-iptables addignoreip 127.0.0.1?

/usr/bin/python /usr/bin/fail2ban-client set vsftpd-iptables addignoreip 192.168.145.0/24?

/usr/bin/python /usr/bin/fail2ban-client set vsftpd-iptables delignoreip 10.9.25.158/29

/usr/bin/python /usr/bin/fail2ban-client set vsftpd-iptables delignoreip 192.168.145.0/24

/usr/bin/python /usr/bin/fail2ban-server -b -s /var/run/fail2ban/fail2ban.sock -p /var/run/fail2ban/fail2ban.pid -x

Thanks for this post. I was observing in top that fail2ban was jumping to 100% CPU every 3-5 minutes on a few deployments and was wondering what was causing it. After seeing this post, I realized IDS sync had recently been turned on for those same deployments.
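In the `ps -e -o command` listing above, several jail/network pairs appear with both an `addignoreip` and a `delignoreip` command in the same snapshot (for example `ssh-iptables` with `10.9.25.158/29`), which suggests the sync may be removing and re-adding the same ignore entries on each pass. The trailing `?` on the `addignoreip` arguments is probably a non-printable character such as a newline, which `ps` displays as `?`; that is an assumption, not something confirmed in the thread. The hypothetical helper below, for a POSIX shell with awk, lists the jail/address pairs that have both an add and a delete in flight.

```shell
#!/bin/sh
# Hypothetical helper: read `ps -e -o command` output on stdin and print
# "<jail> <address>" for every pair that has both a
# "fail2ban-client set <jail> addignoreip <address>" and a
# "fail2ban-client set <jail> delignoreip <address>" process listed.
find_churn() {
  awk '
    {
      # Locate the fail2ban-client argument; it may follow "/usr/bin/python".
      for (i = 1; i <= NF; i++) if ($i ~ /fail2ban-client$/) break
      if (i > NF || $(i + 1) != "set") next
      action = $(i + 3)
      if (action != "addignoreip" && action != "delignoreip") next
      key = $(i + 2) " " $(i + 4)
      sub(/[?]$/, "", key)   # drop a trailing "?" (assumed non-printable char)
      seen[key, action] = 1
      keys[key] = 1
    }
    END {
      for (k in keys)
        if (((k, "addignoreip") in seen) && ((k, "delignoreip") in seen)) print k
    }' | sort
}
```

Run as `ps -e -o command= | find_churn` while a sync burst is in progress. Against the listing posted above it would flag `10.9.25.158/29` in six jails and `192.168.145.0/24` in `ssh-iptables` and `vsftpd-iptables`.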

I am seeing the same behavior. I’m running a very small server (4 extensions, hardly used) and have been getting progressively increasing CPU load, up to 100%.

A reboot brings it back down to normal, but over the course of a couple of days it climbs to full utilization.

Thanks to this thread, I have been tinkering with the IDS functions, and they do seem to be related.

I hope a fix/solution will be found for this issue soon.
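The pattern reported in this thread (load climbing over a couple of days, then dropping after a reboot or a switch back to Legacy) could be documented by logging how much CPU `fail2ban-server` uses per interval and lining that up against when sync was enabled. A minimal sketch, assuming Linux `/proc` and the pid file path `/var/run/fail2ban/fail2ban.pid` taken from the `fail2ban-server -p` argument quoted earlier; the function names and the 300-second default interval are hypothetical. It only tracks the server process, so the short-lived `fail2ban-client` processes will not show up in its output.

```shell
#!/bin/sh
# Hypothetical helper: print utime + stime (fields 14 and 15 of
# /proc/<pid>/stat, in clock ticks) for one stat line read on stdin.
# Stripping everything up to ") " removes the pid and "(comm)" fields,
# so field 14 becomes $12 and field 15 becomes $13.
stat_ticks() {
  sed 's/.*) //' | awk '{ print $12 + $13 }'
}

# Hypothetical helper: CPU share (percent of one CPU) between two samples.
# usage: cpu_pct <ticks_before> <ticks_after> <ticks_per_second> <interval_seconds>
cpu_pct() {
  awk -v a="$1" -v b="$2" -v hz="$3" -v s="$4" \
    'BEGIN { printf "%.1f\n", (b - a) / hz / s * 100 }'
}

# Log fail2ban-server CPU share and resident memory every <interval> seconds.
# A restart of fail2ban-server changes the pid and resets its counters, so the
# first sample after a restart will be wrong.
watch_server() {
  pidfile=/var/run/fail2ban/fail2ban.pid   # from the -p argument in the thread
  interval=${1:-300}
  hz=$(getconf CLK_TCK)
  prev=$(stat_ticks < "/proc/$(cat "$pidfile")/stat")
  while sleep "$interval"; do
    pid=$(cat "$pidfile")
    now=$(stat_ticks < "/proc/$pid/stat")
    rss=$(awk '/^VmRSS:/ { print $2 }' "/proc/$pid/status")
    printf '%s cpu=%s%% rss=%skB\n' "$(date '+%Y-%m-%d %H:%M:%S')" \
      "$(cpu_pct "$prev" "$now" "$hz" "$interval")" "$rss"
    prev=$now
  done
}
```

Left running for a few days, for example as `watch_server 300 >> /tmp/f2b-server.log` (an illustrative path), this would show whether the server’s own share grows along with the overall load.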

This topic was automatically closed 31 days after the last reply. New replies are no longer allowed.