We have SIP trunks through AT&T at a central location with one freePBX server(distro v15 with pjsip). We have 36 locations all with multiple phones that connect directly to our centralized FreePBX server. Each locations has whatever the local cable provider is for their internet(Spectrum, Comcast, etc).

Earlier this week we started getting locations complaining that when they had incoming calls where they could hear the caller, but the caller couldn’t hear them. Some locations are affected, some are not. It’s not every call, it’s only some calls.

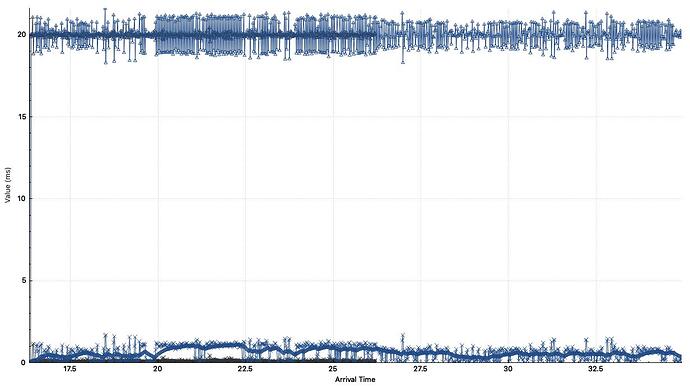

We still have a trouble ticket open with AT&T on this issue and keep providing “sample” calls that have the issue and they continue to tell us it’s due to “high jitter”. Here is a copy/paste of the jitter they told us for one of the problem ones.

Minimum Jitter(ms) 9.39

Average Jitter(ms) 314.25

Maximum Jitter(ms) 1062.69

The only noteworthy recent change is about 2 weeks ago we migrated the FreePBX server which runs on a VM from one hypervisor to another. They are both on site HP Proliant DL360 Gen10 servers, the newer one has a slightly newer CPU(Intel Xeon Silver 4210R) and SSD drives that the VM now runs on. It also has a different intel 4 port gigabit network card.

Yesterday we discovered that the dedicated port we have the FreePBX VM running on defaulted to “flow control” for RX. Using ethtool we turned off all flow control and rebooted FreePBX to make sure the change was in affect. We thought we nailed it with that, however the problem persists.

Tonight we are going to try to reboot our meraki MX100 firewall and meraki core switch as a hail mary.(the server local IP address is the same, but now has different mac address, wondering if something is cached causing issues )

Anyone have any suggestions on things to try and/or look at to get this issue resolved? AT&T makes it sound like the “high jitter” isn’t due to their service and it’s a problem on our end. I’ve never had to deal with a “jitter” problem like this so I’m a bit stumped. Thank you in advance for any suggestions you might have.

Edit: Probably worth noting that phones at our HQ location that are on the local network with the phone server are NOT having this issue. It’s only the phones connecting to our phone server over the internet that are experiencing this one way audio issue.